Learn how to use the Augmented Faces feature in your own apps.

Build and run the sample app

To build and run the AugmentedFaces Java app:

Open Android Studio version 3.1 or greater. It is recommended to use a physical device (and not the Android Emulator) to work with Augmented Faces. The device should be connected to the development machine via USB. See the Android quickstart for detailed steps.

Import the AugmentedFaces Java sample into your project.

In Android Studio, click Run. Then choose your device as the deployment target and click OK to launch the sample app on your device.

Click Approve to give the camera access to the sample app.

The app should open the front camera and immediately track your face in the camera feed. It should place images of fox ears over both sides of your forehead, and place a fox nose over your own nose.

Using Augmented Faces in Sceneform

Import assets into Sceneform

Make sure that assets you use for Augmented Faces are scaled and positioned correctly. For tips and practices, refer to Creating Assets for Augmented Faces.

To apply assets such as textures and 3D models to an augmented face mesh in Sceneform, first import the assets.

At runtime, use ModelRenderable.Builder to load the *.sfb models, and use Texture.Builder to load a texture for the face.

```java
import com.google.ar.sceneform.rendering.ModelRenderable;
import com.google.ar.sceneform.rendering.Texture;

// Inside the activity; faceRegionsRenderable and faceMeshTexture are activity fields.

// Load the 3D model that is mapped to regions of the face. Setting both
// setShadowCaster and setShadowReceiver to false ensures that the asset
// neither casts nor receives shadows in the scene.
ModelRenderable.builder()
    .setSource(this, R.raw.fox_face)
    .build()
    .thenAccept(
        modelRenderable -> {
          faceRegionsRenderable = modelRenderable;
          modelRenderable.setShadowCaster(false);
          modelRenderable.setShadowReceiver(false);
        });

// Load the face mesh texture.
Texture.builder()
    .setSource(this, R.drawable.fox_face_mesh_texture)
    .build()
    .thenAccept(texture -> faceMeshTexture = texture);
```
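Both `build()` calls return a `CompletableFuture`, so the model and the texture finish loading asynchronously and the two fields stay `null` until their callbacks run. The sketch below is plain Java, not Sceneform code: the loaders `loadModel()` and `loadTexture()` are hypothetical stand-ins that return strings in place of `ModelRenderable` and `Texture`. It shows one way to combine the two futures and to skip rendering until both assets are available.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical stand-ins: Strings play the role of ModelRenderable and Texture.
class AssetGate {
  // Written from the completion callbacks; volatile so other threads see the update.
  static volatile String faceRegionsRenderable;
  static volatile String faceMeshTexture;

  // Hypothetical stand-in for ModelRenderable.builder()...build().
  static CompletableFuture<String> loadModel() {
    return CompletableFuture.supplyAsync(() -> "fox_face model");
  }

  // Hypothetical stand-in for Texture.builder()...build().
  static CompletableFuture<String> loadTexture() {
    return CompletableFuture.supplyAsync(() -> "fox_face_mesh_texture");
  }

  // Per-frame check: render nothing until both assets have been stored.
  static boolean assetsReady() {
    return faceRegionsRenderable != null && faceMeshTexture != null;
  }

  // Starts both loads; the returned future completes once both fields are set.
  static CompletableFuture<Void> loadAll() {
    CompletableFuture<Void> model = loadModel().thenAccept(m -> faceRegionsRenderable = m);
    CompletableFuture<Void> texture = loadTexture().thenAccept(t -> faceMeshTexture = t);
    return CompletableFuture.allOf(model, texture);
  }

  public static void main(String[] args) {
    System.out.println("ready before load: " + assetsReady());
    // Blocking here is only for the demo; an app should not block its UI thread.
    loadAll().join();
    System.out.println("ready after load: " + assetsReady());
  }
}
```

In an app, the equivalent of `assetsReady()` would typically be an early return at the top of the per-frame update code, so that no face node is configured with a missing model or texture.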

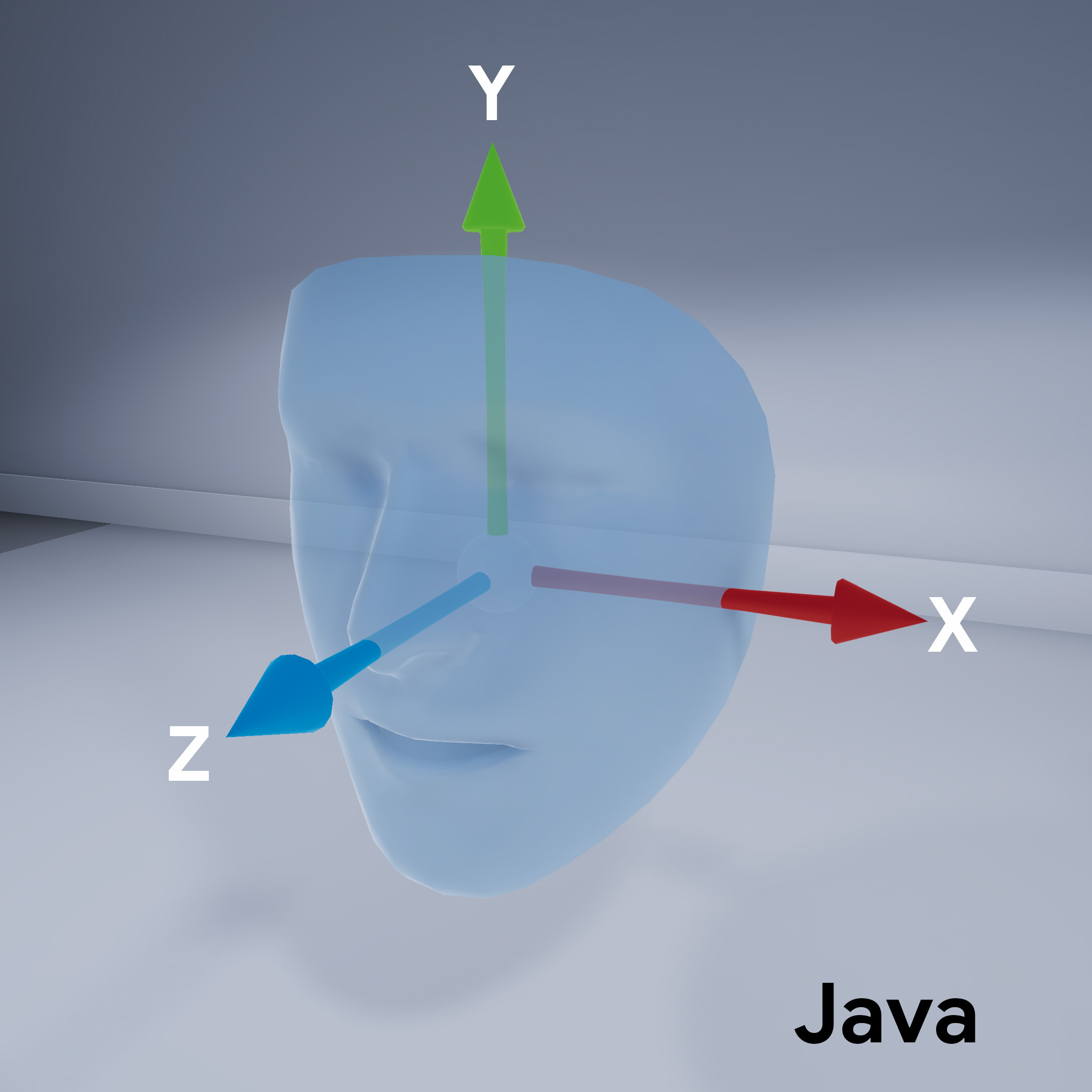

Face mesh orientation

Note the orientation of the face mesh for Sceneform:

Configure the ARCore session

Augmented Faces requires the ARCore session to use the front-facing (selfie) camera and to enable face mesh support. To do this in Sceneform, extend the ArFragment class and override both the session features and the session configuration:

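```java
import com.google.ar.core.Config;
import com.google.ar.core.Config.AugmentedFaceMode;
import com.google.ar.core.Session;
import com.google.ar.sceneform.ux.ArFragment;
import java.util.EnumSet;
import java.util.Set;

// ArFragment subclass configured for Augmented Faces.
public class FaceArFragment extends ArFragment {

  // Request the front-facing (selfie) camera.
  @Override
  protected Set<Session.Feature> getSessionFeatures() {
    return EnumSet.of(Session.Feature.FRONT_CAMERA);
  }

  // Enable the 3D face mesh.
  @Override
  protected Config getSessionConfiguration(Session session) {
    Config config = new Config(session);
    config.setAugmentedFaceMode(AugmentedFaceMode.MESH3D);
    return config;
  }
}
```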

Reference this ArFragment subclass in your activity layout.

Get access to the detected face

The AugmentedFace class implements the Trackable interface. In your app's activity, get the detected faces from the session inside the scene update listener that you register with addOnUpdateListener().

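```java
import com.google.ar.core.AugmentedFace;
import java.util.Collection;

// Inside the scene's update listener: get the list of detected faces.
Collection<AugmentedFace> faceList = session.getAllTrackables(AugmentedFace.class);
```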
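Each returned face also carries a tracking state, which ARCore exposes through `getTrackingState()` and the `TrackingState` enum (`TRACKING`, `PAUSED`, `STOPPED`). A common precaution is to draw only faces that are currently `TRACKING`. The sketch below shows that filter in plain Java; `FakeFace` and the local `TrackingState` enum are hypothetical stand-ins for the ARCore types so the example runs without the ARCore library.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical stand-in mirroring the values of com.google.ar.core.TrackingState.
enum TrackingState { TRACKING, PAUSED, STOPPED }

// Hypothetical stand-in for AugmentedFace: only the tracking state matters here.
final class FakeFace {
  final String id;
  final TrackingState state;

  FakeFace(String id, TrackingState state) {
    this.id = id;
    this.state = state;
  }

  TrackingState getTrackingState() {
    return state;
  }
}

class TrackedFaces {
  // Keeps only the faces that are currently being tracked, preserving order.
  static List<FakeFace> trackedOnly(Collection<FakeFace> faceList) {
    return faceList.stream()
        .filter(face -> face.getTrackingState() == TrackingState.TRACKING)
        .collect(Collectors.toList());
  }

  public static void main(String[] args) {
    List<FakeFace> faces = Arrays.asList(
        new FakeFace("a", TrackingState.TRACKING),
        new FakeFace("b", TrackingState.PAUSED),
        new FakeFace("c", TrackingState.STOPPED));
    for (FakeFace face : trackedOnly(faces)) {
      System.out.println(face.id); // prints "a"
    }
  }
}
```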

Render the effect for the face

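Rendering the effect involves creating an AugmentedFaceNode for each detected face, adding it to the scene, and setting the 3D assets and the texture to overlay on the face: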

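```java
import com.google.ar.sceneform.ux.AugmentedFaceNode;

// scene is the Scene of the fragment's ArSceneView. This loop runs on every
// scene update, so a real app should create a node only once per face.
for (AugmentedFace face : faceList) {
  // Create a face node and add it to the scene.
  AugmentedFaceNode faceNode = new AugmentedFaceNode(face);
  faceNode.setParent(scene);

  // Overlay the 3D assets on the face.
  faceNode.setFaceRegionsRenderable(faceRegionsRenderable);

  // Overlay a texture on the face.
  faceNode.setFaceMeshTexture(faceMeshTexture);

  // …
}
```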
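Because the loop above runs on every scene update, constructing a new `AugmentedFaceNode` each time would keep adding nodes for the same face. One way to avoid that, offered here as an assumption rather than as the method this guide prescribes, is to keep a map from each face to its node: create a node the first time a tracked face appears, and detach and forget it once the face's tracking state becomes `STOPPED`. The sketch uses hypothetical stand-ins (`StubFace`, `StubFaceNode`, `StubTrackingState`) in place of `AugmentedFace`, `AugmentedFaceNode`, and `TrackingState` so it runs as plain Java, and it assumes a face object stays the same map key across frames.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in mirroring ARCore's TrackingState values.
enum StubTrackingState { TRACKING, PAUSED, STOPPED }

// Hypothetical stand-in for AugmentedFace (identity-based equality).
final class StubFace {
  StubTrackingState state = StubTrackingState.TRACKING;
}

// Hypothetical stand-in for AugmentedFaceNode.
final class StubFaceNode {
  final StubFace face;
  boolean attached = true; // Stands in for setParent(scene) / setParent(null).

  StubFaceNode(StubFace face) {
    this.face = face;
  }
}

class FaceNodeRegistry {
  private final Map<StubFace, StubFaceNode> faceNodeMap = new HashMap<>();
  int nodesCreated = 0;

  // Called once per frame with the faces reported by the session.
  void onUpdate(List<StubFace> faceList) {
    // Create a node only for tracked faces seen for the first time.
    for (StubFace face : faceList) {
      if (face.state == StubTrackingState.TRACKING && !faceNodeMap.containsKey(face)) {
        faceNodeMap.put(face, new StubFaceNode(face));
        nodesCreated++;
      }
    }
    // Detach and forget nodes whose face has stopped tracking.
    Iterator<Map.Entry<StubFace, StubFaceNode>> it = faceNodeMap.entrySet().iterator();
    while (it.hasNext()) {
      Map.Entry<StubFace, StubFaceNode> entry = it.next();
      if (entry.getKey().state == StubTrackingState.STOPPED) {
        entry.getValue().attached = false;
        it.remove();
      }
    }
  }

  int activeNodes() {
    return faceNodeMap.size();
  }

  public static void main(String[] args) {
    FaceNodeRegistry registry = new FaceNodeRegistry();
    StubFace face = new StubFace();
    List<StubFace> frame = new ArrayList<>();
    frame.add(face);
    registry.onUpdate(frame);
    registry.onUpdate(frame); // Same face on the next frame: no new node.
    System.out.println(registry.nodesCreated); // prints 1
    face.state = StubTrackingState.STOPPED;
    registry.onUpdate(frame);
    System.out.println(registry.activeNodes()); // prints 0
  }
}
```

The design choice is to key the node on the face itself, so per-frame work reduces to a map lookup and the node's lifetime follows the face's tracking state.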